When Weather Gets Bad

It can be hard to tell a conversation-led story about good weather. So, what happens when the weather gets bad and might be life-threatening?

Here, I’ve collected some work samples that show how a conversation-led voice experience can provide users with the information they need to make really big decisions when faced with life-threatening weather.

The Weather Channel is at its best when helping people during major storms. Until my team came along, voice had never been part of storm coverage. Knowing that users rely on smart speakers for information, my team and I wanted our voice apps to help in new ways.

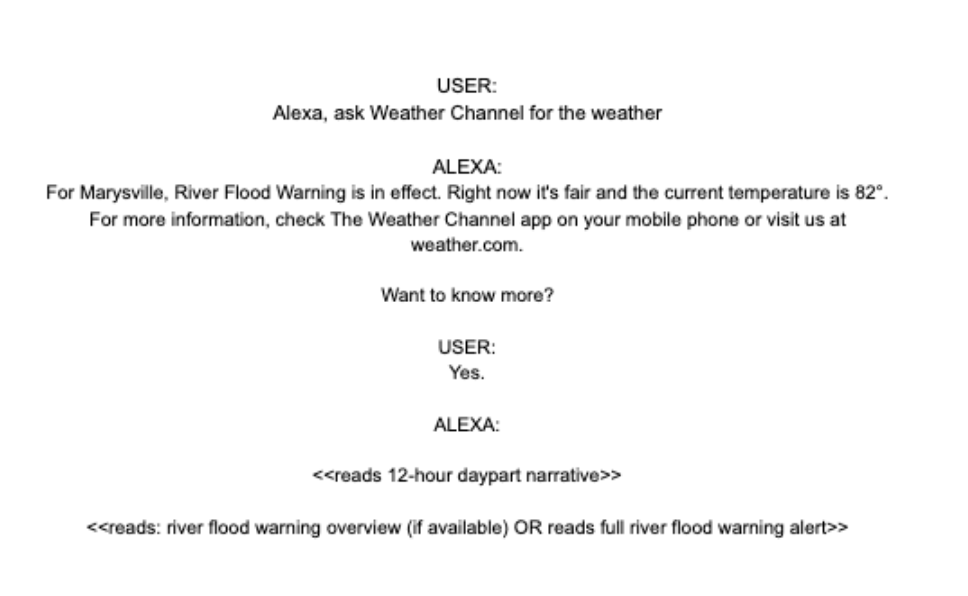

An alert from The National Weather Service:

A National Weather Service (NWS) alert can be anything from a small craft advisory to a hurricane or tornado warning.

Communicating these to users is not totally straightforward, because you don’t want to give something that a general user might not understand (e.g., a small craft advisory) the same weight as a tornado warning.

These alerts can be life-saving. I worked with the developer to put the most severe alerts (e.g., tornado or hurricane warnings) first, so that the user hears the most important piece of information before the app moves on to answering the user’s question. Less urgent alerts, like a small craft advisory, are played after the user’s question is answered.
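The ordering described above can be sketched in a few lines of Python. This is only an illustration, not the production skill code: the `Alert` type, the `SEVERE_EVENTS` set, the `build_response` function, and the wording of the follow-up prompt are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List

# Assumption: which alert types count as "severe" is illustrative only.
SEVERE_EVENTS = {"Tornado Warning", "Hurricane Warning"}


@dataclass
class Alert:
    event: str     # alert type, e.g. "Tornado Warning"
    headline: str  # short sentence the assistant speaks


def build_response(answer: str, alerts: List[Alert]) -> List[str]:
    """Order the spoken segments: severe alerts, the answer, then less urgent alerts."""
    severe = [a.headline for a in alerts if a.event in SEVERE_EVENTS]
    less_urgent = [a.headline for a in alerts if a.event not in SEVERE_EVENTS]
    segments = severe + [answer] + less_urgent
    if alerts:
        # Hypothetical prompt wording: always offer the full alert text.
        segments.append("Would you like to hear the full alert?")
    return segments
```

Given a tornado warning and a small craft advisory, the user would hear the tornado warning, then the answer to their question, then the advisory, and finally the offer to hear the full alert.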

In all cases, users are given the chance to hear the full alert message. From a quality perspective, this isn’t so ideal, because The Weather Channel, like all weather providers, gets this data from the NWS with no ability to format or rewrite it.

If the user says ‘yes’ to hear the alert, the bot reads the full statement from the NWS. Sometimes, these are well-written and easy for a voice assistant to read. At other times, they’ve got sloppy sentence structure or weird punctuation. This will trip up Alexa and basically any other voice assistant. But users in testing told us that that’s ok. “I can always tell Alexa to stop,” said one user.

Hurricane Cindy: Should I Stay or Should I Go?

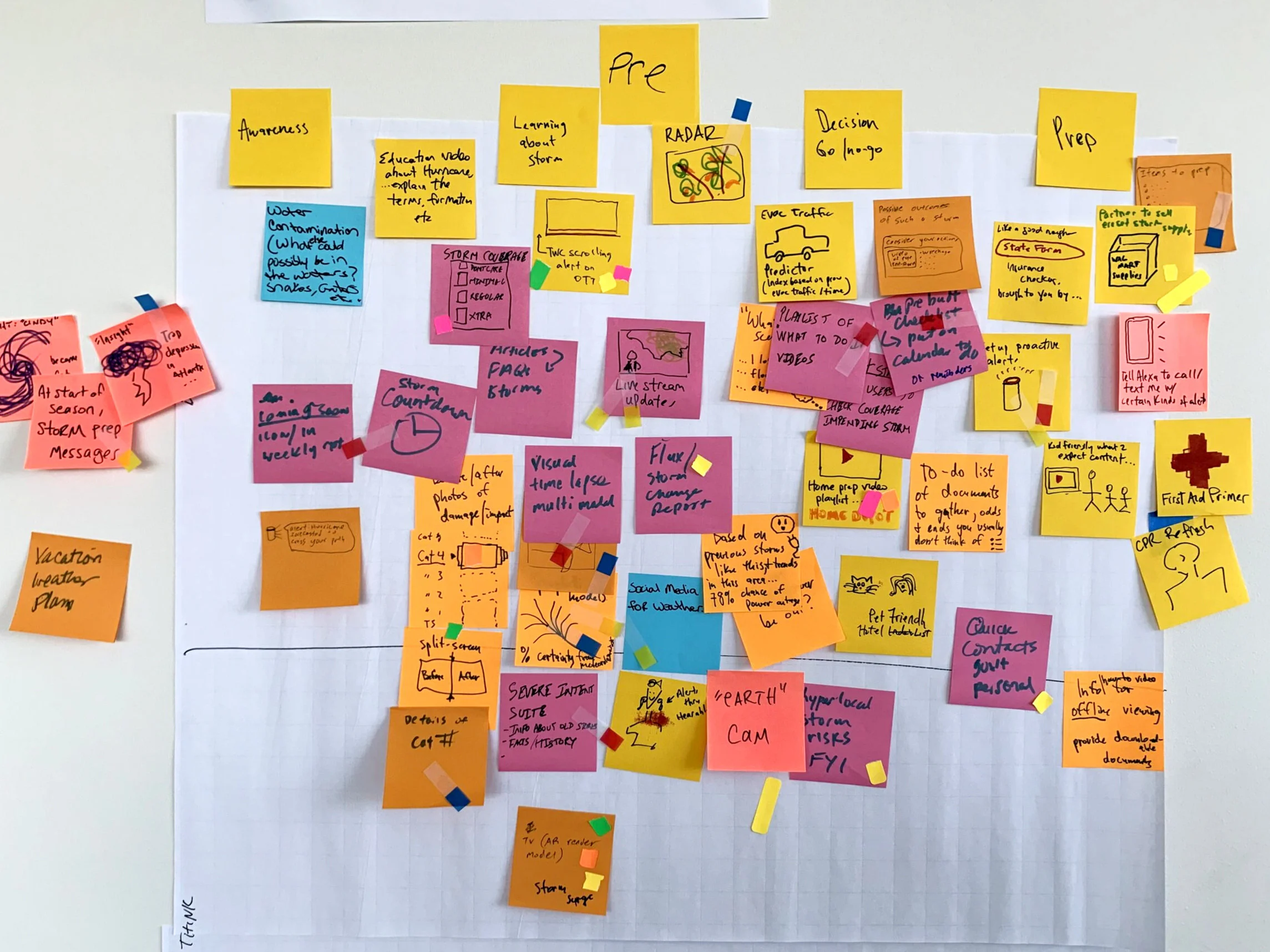

To work on conversation-first, smart speaker concepts for severe weather, I staged and facilitated a multi-day design thinking workshop. Using the personas of ‘Floyd’ and ‘Carla,’ I crafted a scenario where a family lived in the potential path of a dangerous hurricane. They were preparing for ‘Hurricane Cindy’ and were using their smart speaker for hands-free updates throughout the process. I also urged the team to create a persona for ‘Cindy,’ since named storms act in unpredictable ways that would determine how a family might prepare. So, the team had to decide where Cindy made landfall, how strong it was at landfall, whether it moved inland and became a thunderstorm, or whether it dragged along the coast and created more havoc.

Map the Scenario.

I used Scenario Maps to understand the users’ current state and pain points. By working through what we thought ‘Floyd and Carla’ might think, feel, and do, I got the team to highlight areas where a Weather Channel smart speaker app could support their needs or solve their problems in unique or novel ways.

Big Ideas.

Using the As-Is Scenario Map, I got the team to vote on solvable pain points and then brainstorm big ideas to help solve these problems. No judgment on ideas. Just generate as many ideas as possible and be ready to talk through how each one addresses a problem and how the feature or product works.

Prioritize.

Using the big ideas with the most votes, we created a Prioritization Grid. Ideas are ranked by perceived user value and feasibility to implement quickly. Those above and to the left of the red curve are the ideas we chose to move forward with as the team built prototypes.

“Show me the storm.”

Considering a cord cutter or a user with a smart display, I offered ‘show me the storm’ as an idea. I got the idea from the EarthCam skill for Alexa and the maps feature on a lot of in-flight entertainment centers, where I literally sit and watch my flight crawl toward its destination. It’s dreadful, but I and a lot of other people do it. So I created a live-look experience, giving users a live video feed geolocated around the place where a hurricane is expected to make landfall. The user can see the level of the storm’s winds and rains, toggle to video, and read headlines from The Weather Channel newsroom.

For audio, I tried thinking about the word storm and how many meanings it might have. I also considered that, in a storm, the immediate threat is probably the most important information, since ‘the storm’ could really mean ‘what’s the weather right now?’ if it’s already in progress.

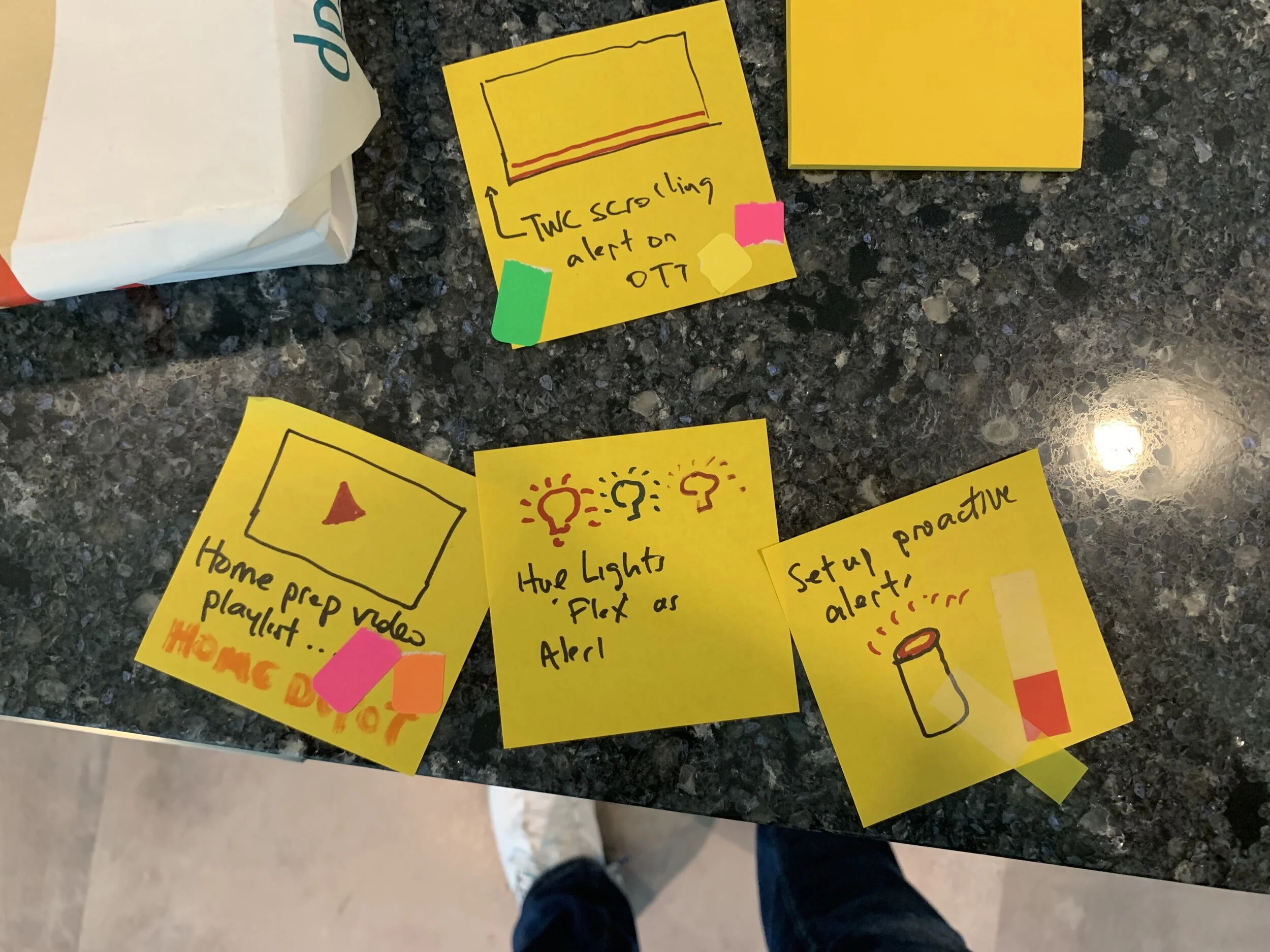

“Tell me the latest about the storm.”

In this concept, the user is asking for the latest on the storm. Since these events are covered around the clock, we decided to give cord cutters, OTT users, and smart display owners a livestream of any video currently on weather.com or The Weather Channel apps. A library of other videos is also accessible in this experience. And, the solution includes a scrolling footer with headlines and updates.

For audio, I tried thinking about the word storm and how many meanings it might have. I also considered that, in a storm, the immediate threat is probably the most important information, since ‘the storm’ could really mean ‘what’s the weather right now?’ if it’s already in progress.