Contact Center AI (CCAI)

Conversational interfaces have the power to transform a brand’s interactions with consumers.

While on assignment with Google for a year and a half, I led a cross-functional team of conversation designers, linguists, data scientists, and technologists in building chat and voice solutions to replace the legacy systems of a tier-1, US-based telecommunications provider.

It starts with data.

Conversational interfaces rely on language as input. If the bot can’t understand your users’ statements and process them accordingly, users will never experience the great thing you’ve designed.

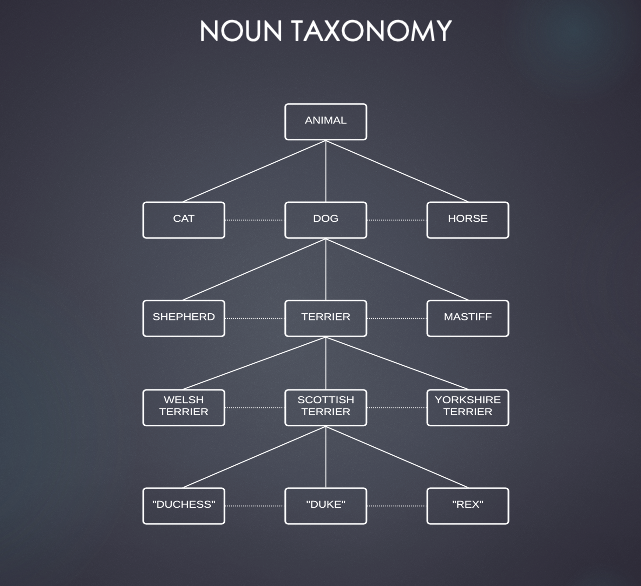

In the first stages of this project, I oversaw a team of linguists as they reviewed customer transcripts, identified head and contextual intents, and organized those intents into a useful taxonomy, or information architecture, for our bots.

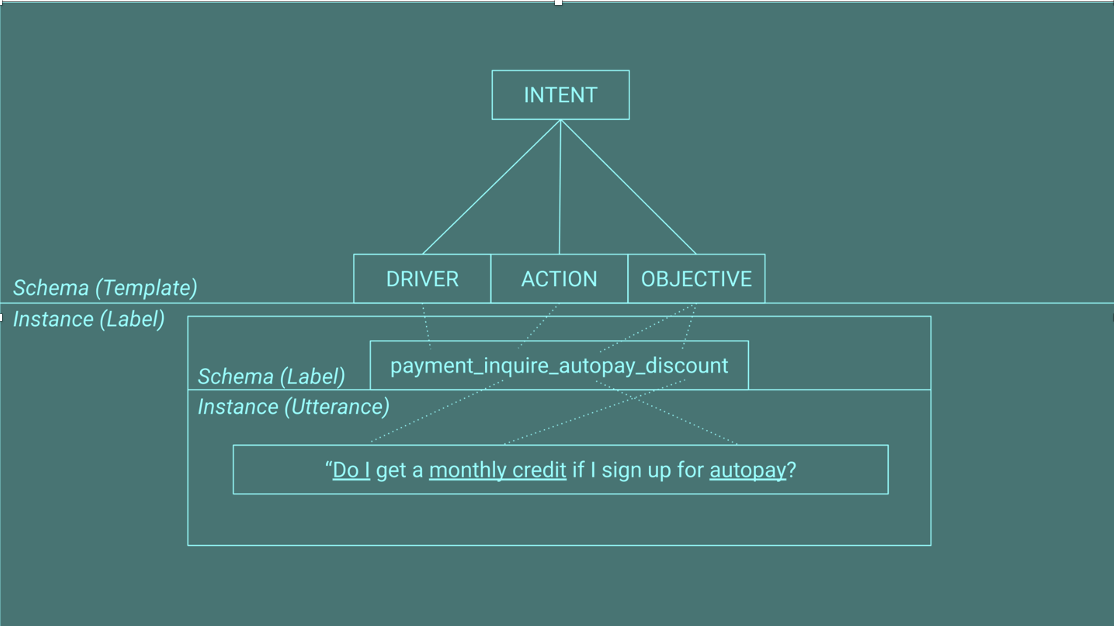

My team created a system for data classification: an information architecture, or taxonomy, which we defined as a system of classification realizing a hierarchy of schema / instance relations.

Ours is a verb-based taxonomy that incorporates nouns to provide a uniform but readable schematic for understanding broad topic areas (drivers), user or agent actions, and user or agent objectives.

This approach became the Google CCAI standard for intent and TPU classification. In practice, this system saw our client realize a gain in F1 score from 0.56 to 0.83 for the chat bot within two sprints of launch.
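For readers unfamiliar with the metric, here is a minimal sketch of how an F1 score for intent classification can be computed from labeled transcripts. It assumes macro-averaging across intents, which may differ from how the project's score was calculated. The function name is hypothetical and is not taken from the project's tooling.

```python
from collections import Counter


def macro_f1(expected, predicted):
    """Macro-averaged F1 over every intent label seen in either list.

    expected:  the intent a reviewer assigned to each utterance
    predicted: the intent the bot matched for the same utterance
    (Assumption: macro-averaging; the project may have used another variant.)
    """
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must be the same length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, guess in zip(expected, predicted):
        if gold == guess:
            tp[gold] += 1
        else:
            fp[guess] += 1  # the bot matched this intent when it should not have
            fn[gold] += 1   # the bot missed the intent it should have matched

    scores = []
    for label in set(expected) | set(predicted):
        denominator = 2 * tp[label] + fp[label] + fn[label]
        # Per-intent F1 = 2TP / (2TP + FP + FN)
        scores.append(2 * tp[label] / denominator if denominator else 0.0)
    return sum(scores) / len(scores) if scores else 0.0
```

A score near 1.0 means the bot matches nearly every utterance to the intent a reviewer would choose, and it rarely matches an intent incorrectly.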

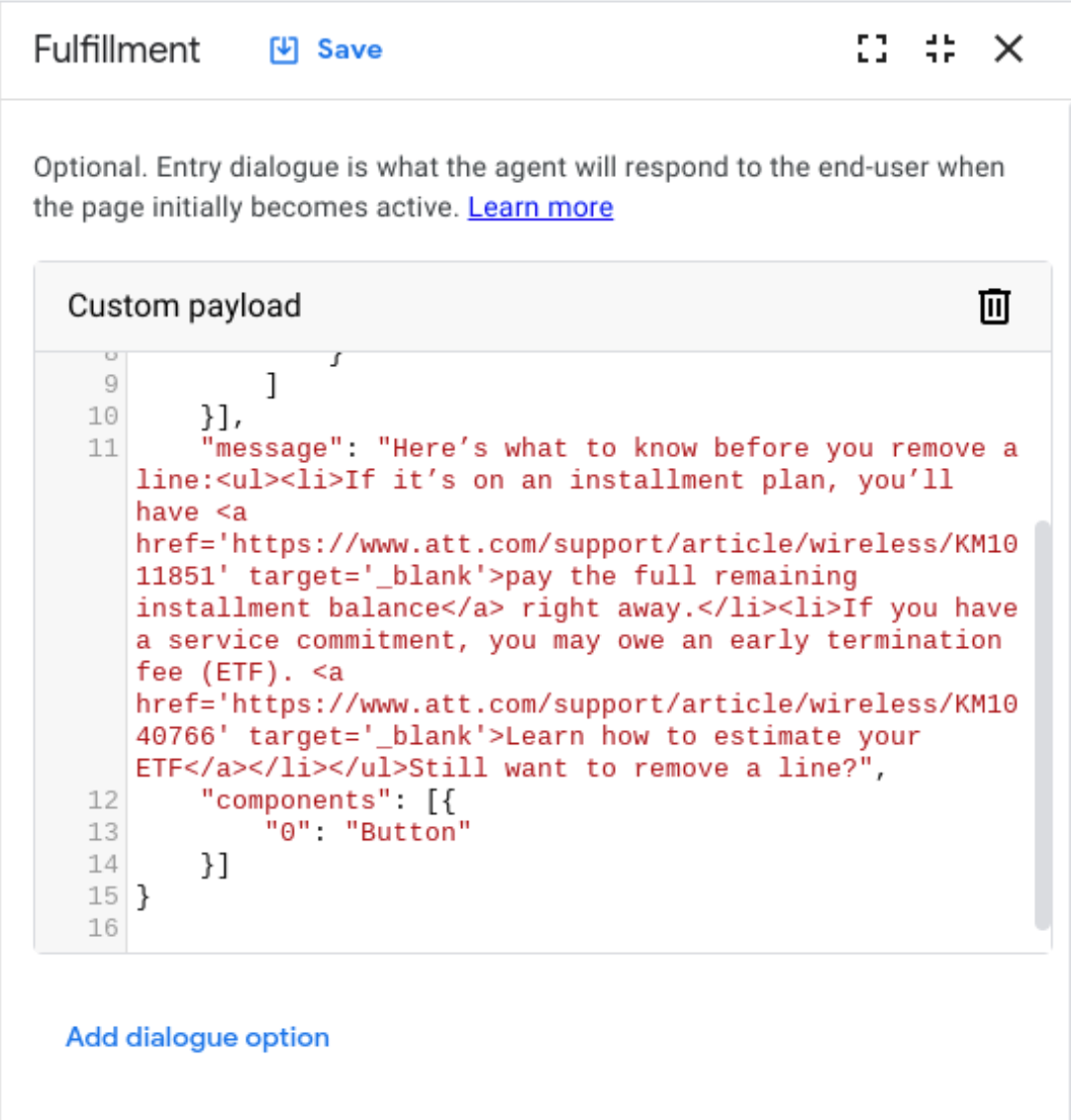

Bot design and development

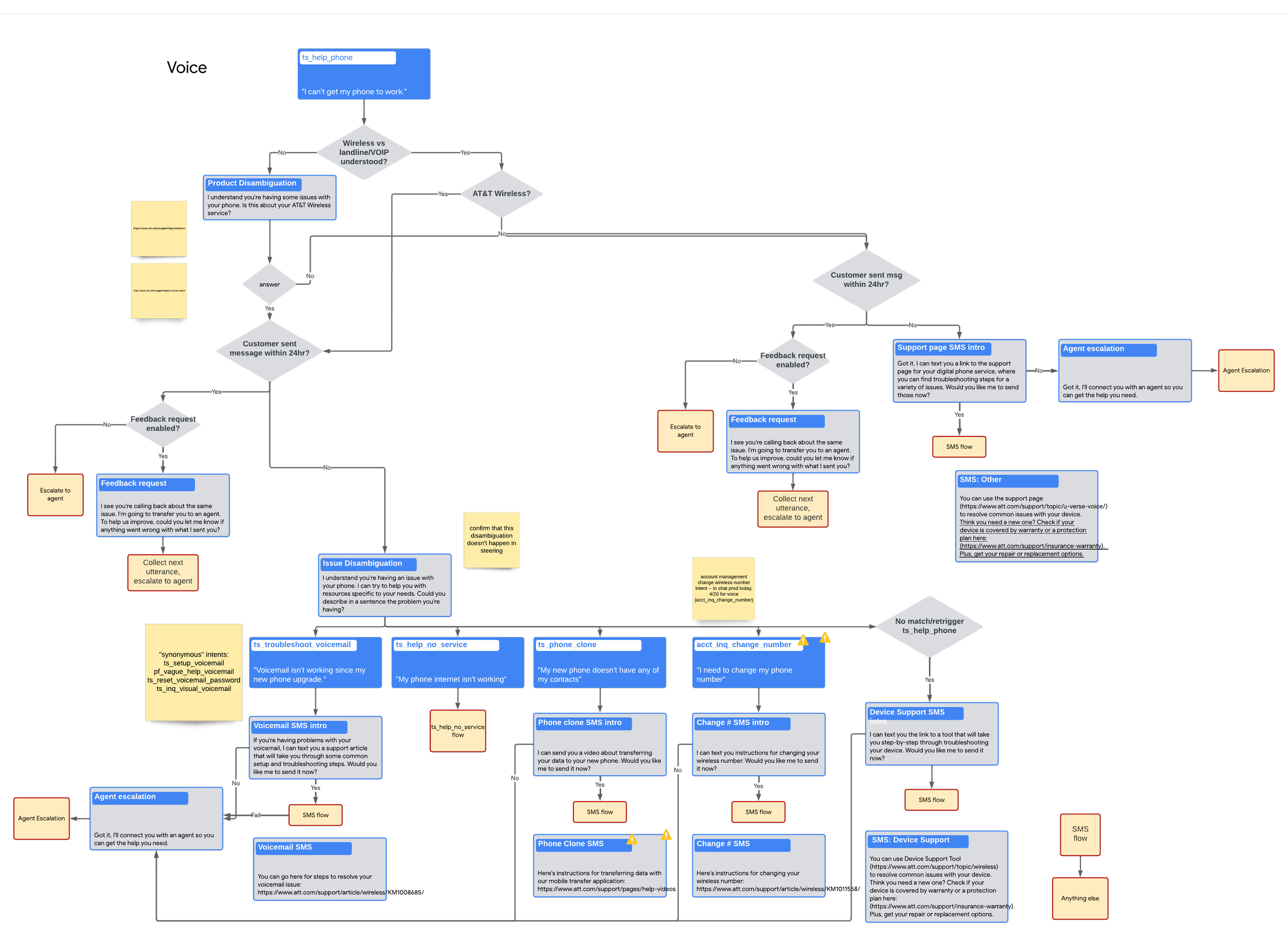

With the taxonomy set, I led the team through the design and development of bot experiences. I facilitated the creation of design systems and oversaw bot development in Dialogflow CX.

Our bots met or exceeded Google benchmarks of 30% containment for billing inquiries and ~50% containment for account management inquiries.
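As a rough illustration of the containment metric, the sketch below assumes containment means the share of conversations the bot finished without handing off to a human agent. The function name and the boolean input format are hypothetical; they are not the project's actual reporting code.

```python
def containment_rate(handed_off_flags):
    """Share of conversations the bot handled without a human handoff.

    handed_off_flags: one boolean per conversation, True when the
    conversation was transferred to a human agent (assumed format).
    """
    flags = list(handed_off_flags)
    if not flags:
        return 0.0
    contained = sum(1 for handed_off in flags if not handed_off)
    return contained / len(flags)
```

Under this definition, a 30% benchmark means that at least three in ten conversations end without a transfer.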

Optimizations

Automation

Using a suite of Python-based scripts, my team was able to partially automate conversation analysis and transcript review. I introduced this process efficiency so the team could spend more time during a sprint on transcript review. We ultimately identified problems that manual analysis would have obscured, and we increased the number of transcripts processed by 300%.

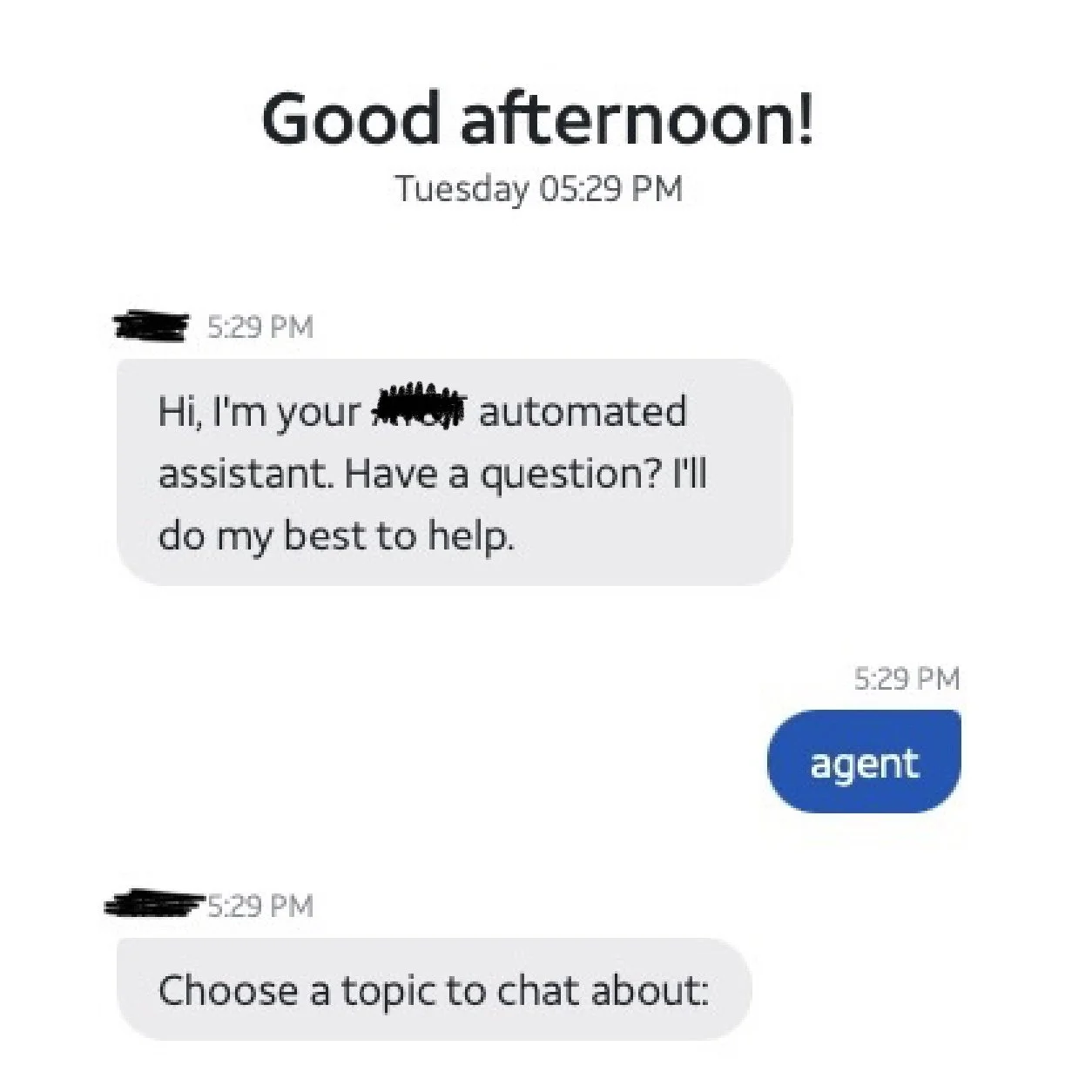

Chat abandonment

The automation scripts were able to perform position analysis, telling us where conversations were ending. We noticed that 20-25% of all conversations ended at the welcome message, indicating a major issue with how the bot was presenting its functionality to users. I worked with the client to develop alternatives and decrease abandonment by 10% at the first position.
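Position analysis of this kind can be sketched in a few lines. The example below is a minimal illustration, not the scripts we used: it assumes each conversation is available as an ordered list of bot messages, and it treats the number of bot messages delivered as the position where the conversation ended. The function name is hypothetical.

```python
from collections import Counter


def ending_position_shares(conversations):
    """Map each ending position to the share of conversations that ended there.

    conversations: list of conversations, each an ordered list of the bot
    messages the user received (assumed format). Position 1 is the welcome
    message, so a conversation with one bot message ended at position 1.
    """
    endings = Counter(len(bot_messages) for bot_messages in conversations if bot_messages)
    total = sum(endings.values())
    if total == 0:
        return {}
    return {position: count / total for position, count in sorted(endings.items())}
```

A large share at position 1 is the signal described above: users leave right after the welcome message.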

Vague Intents

Our most invoked intent (by a lot) was something called bill-vague-help. This intent is matched whenever a user says something like ‘billing’ or ‘my bill’ instead of a fully formed question about their bill. Here, the bot does a lot of work to disambiguate and figure out the user’s question. I observed a ‘three strikes’ pattern, wherein the bot needs to clarify the question before the 3rd additional question to keep the user engaged. I also worked with other teams to devise and test a revised prompt to instruct the user on how to form a ‘better’ question from the jump.
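One way to check for a pattern like this in transcripts is to group conversations by how many clarifying questions the bot asked and compare how often users stayed engaged. The sketch below is an assumption about how that could be measured, not the analysis we ran. The input format and function name are hypothetical.

```python
from collections import defaultdict


def retention_by_clarifications(conversations):
    """Share of users who stayed engaged, keyed by clarifying questions asked.

    conversations: iterable of (clarifying_questions_asked, user_stayed)
    pairs, one per conversation that matched the vague intent (assumed format).
    """
    totals = defaultdict(int)
    stayed = defaultdict(int)
    for asked, user_stayed in conversations:
        totals[asked] += 1
        if user_stayed:
            stayed[asked] += 1
    return {asked: stayed[asked] / totals[asked] for asked in sorted(totals)}
```

A sharp drop in the share at the third clarifying question would be consistent with the 'three strikes' observation.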