The Weather Channel for Smart Speakers

The Weather Channel has long been the leading weather app globally, and it provides data to all major voice assistants. But how would the experience translate to a smart speaker, and could it be unique and interesting enough to attract users?

To date, our solution has attracted 100k enablements and a 4.5-star rating ⭐️

“Hey Alexa, ask The Weather Channel: what’s the weather?”

The question above is a mouthful. But answering what seems like an easy question is really, really hard.

Weather is a common word, a common subject of small talk, and a common category of information sought from smart speakers, but it can mean a lot of different things.

Do you mean the weather right now? The weather 4 hours from now? Or the weather next Tuesday?

To further complicate matters, weather information is most often consumed digitally, usually in apps, where users have graphic displays, infographics, and even radar to pick out the data elements that matter to them and piece together the weather story they need to go about their day.

Right now, in Atlanta, Georgia, it’s 68 degrees and mostly cloudy. Later, expect a high temperature of 80 degrees and a low of 63. Chance of rain is 0%.

User-centered design.

In parallel with market and technical analysis, I set out to understand our users. The process resulted in three core personas. But since smart speakers were still a relatively new technology, I planned not only 1:1 interviews but also design thinking activities to help me and the team understand how these devices are used in general and where existing apps might not fully meet user needs around weather.

Who is the smart speaker user?

Working with research, I organized 1:1 user interviews and wrote the research plan, participant selection criteria, moderator guide, and wrap-up reports. I quickly came to understand users’ frustrations with the current state of voice assistants, which make it hard to find and use ‘apps’ on smart speakers. Users made it clear that smart speakers in particular are not personal devices: they inhabit shared spaces. Yet users want personalized experiences. Some of this depends on Amazon or Google to enable, but the finding added a dimension to how I thought about structuring and delivering a credible response.

What do they need?

The interviewees represented smart speaker users generally but had some diversity in their experience with and expectations of smart speakers. While some users tired of the devices once the novelty wore off and they became ‘expensive jukeboxes,’ others incorporated them into daily routines. With regard to weather, there was one clear universal need state: planning. Users trust the smart speaker to give weather information as they go through their morning routines and plan the day ahead. This learning provided the starting point for design.

What gets them to wow?

Asking users what they expected from the device in 5 years produced some interesting, and sometimes out-there, specifics. Most answers followed the path of ambient computing and all-the-time access to their favored voice assistant. Since that might be years away, I focused on the content of the potential response and learned from users that:

The app has to provide the basics (e.g., temperatures, chance of rain), and

The app has to provide something extra that users might need to know but might not think to ask about.

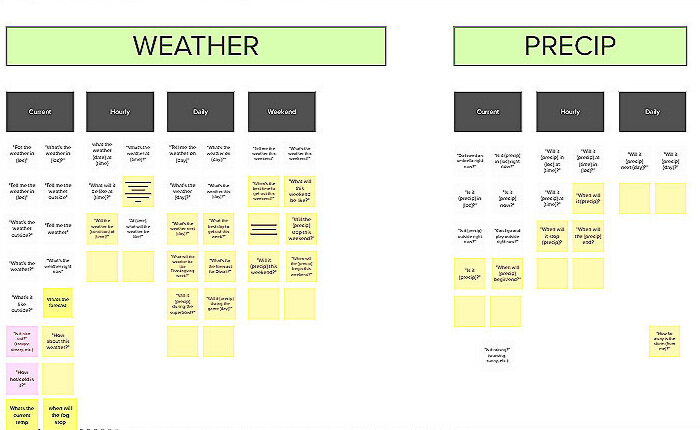

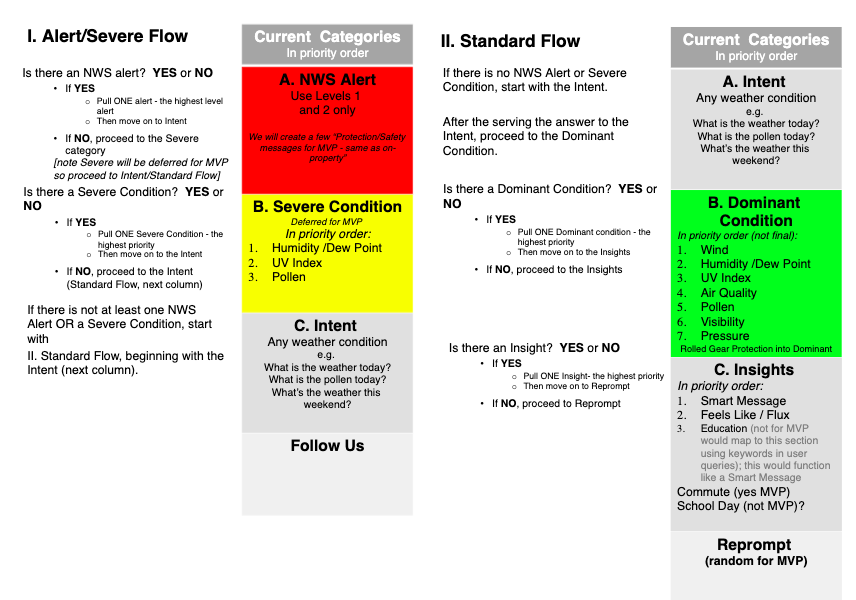

Identifying intent.

Without a data scientist or linguist on the team, I orchestrated a thorough review of the information architecture of TWC’s digital properties to identify topics of conversation. These included general topics like ‘weather’ and ‘precipitation’ and more specific ones like ‘weather at a specific time today.’ Looking at these general categories and their variations gave us intents and sub-intents, so we could begin exploratory development and start creating dialogue flows.

Later, we approached the process of identifying questions differently. Partnering with research, we constructed a simple survey using a Jeopardy model: we gave users an answer and asked them what question they think they’d ask to get it. I then reviewed and coded the results to learn which words users would choose, which helped us train the assistants to handle users’ questions.

Gone in 20 seconds.

Based on user research and design thinking outputs, I wrote sample dialogues that covered a variety of weather conditions, including a sunny day, a rainy day, severe storms, and more.

I got the team together for table reads, each time asking one team member to read the ‘user’ lines and another to read Alexa’s lines. I also developed a ‘cue card’ system so that ‘the user’ couldn’t read ahead and know what the bot was going to say. The team member playing the user would look at a cue card and read the user’s line, and another team member would respond as the bot. Afterward, we discussed what we heard, what was easy or hard to understand, which data points stood out, and which ones might be missing.

We also extended these table reads to the wider IBM network as a kind of ‘guerrilla’ prototype test. I recruited participants from across IBM in the US. On a video call, I asked some warm-up questions to understand their habits around weather, smart speakers, and other voice assistants. Then I pulled out the cue cards.

“It takes too long,” was the common response. “It takes longer than it would take me to look at my phone.” Participants liked the information and thought it was useful but didn’t want to have a conversation to get it. They said they liked the note about humidity and that other apps or skills they use didn’t surface any data that might help them understand how the weather would feel.

Since a conversational interaction would put off users, I needed a way to satisfy the user need through a command-and-response model. My next round of designs had to condense the information in this dialogue to a single response, hit all the highlights, be read / heard in about 20 seconds, and contain extras like the humidity insight.
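To sanity-check draft responses against the roughly 20-second target, a word-count estimate goes a long way. Below is a minimal sketch in Python, assuming a placeholder speaking rate of about 150 words per minute; real TTS timing varies by voice and by how numbers are expanded (“68” is spoken as “sixty-eight”), so the result is only a rough guide.

```python
# Rough check of whether a spoken response fits a time budget.
# ASSUMPTION: 150 words per minute is a placeholder speaking rate,
# not a measured figure for any particular TTS voice.
WORDS_PER_MINUTE = 150
BUDGET_SECONDS = 20.0


def estimated_seconds(text: str, wpm: float = WORDS_PER_MINUTE) -> float:
    """Estimate speaking time from the whitespace-separated word count."""
    words = len(text.split())
    return words / wpm * 60.0


def fits_budget(text: str, budget: float = BUDGET_SECONDS) -> bool:
    """True if the estimated speaking time is within the budget."""
    return estimated_seconds(text) <= budget


sample = (
    "Right now, in Atlanta, Georgia, it's 68 degrees and mostly cloudy. "
    "Later, expect a high temperature of 80 degrees and a low of 63. "
    "Chance of rain is 0%."
)
print(round(estimated_seconds(sample), 1), fits_budget(sample))
```

At this assumed rate, the 29-word Atlanta sample comes to about 11.6 seconds, leaving room in the budget for an extra insight.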

The DIY approach

In a way, we had a leg up. Through our relationship with the cable network, our weather sciences and technology teams had developed solutions years ago for videos displayed on TV. These updates provided text for 24-hour and 12-hour dayparts that a TTS engine could process and deliver on top of a video. Looking at these, I had some doubts about whether they were a fit for this use case, given what information was or wasn’t included.

So I wrote a new one to have a third option. To construct it, I took the dialogue flow and removed all the text surrounding the data points, leaving only the data points that come from an API. With only a current temperature, a high temperature, a low temperature, a weather condition (e.g., partly cloudy, rain, sunny), and a chance of rain, I wrote sample responses for a sunny day and a rainy day. In reviews with the developers and other team members, I learned that a separate API can deliver precipitation accumulation. We added this to the rainy-day answer, since it seemed important.
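The stripped-down response amounts to a template filled from a handful of API values. Here is a minimal sketch, assuming a hypothetical `Forecast` record whose field names are illustrative and not the actual TWC API schema; `precip_amount_in` stands in for the value from the separate accumulation API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Forecast:
    # Hypothetical fields for illustration; not the actual TWC API schema.
    location: str
    current_temp: int       # degrees F
    condition: str          # e.g. "mostly cloudy", "rain"
    high: int               # degrees F
    low: int                # degrees F
    precip_chance: int      # percent, 0-100
    precip_amount_in: Optional[float] = None  # inches, from a separate API


def build_response(f: Forecast) -> str:
    """Fill the single command-and-response template from the data points."""
    parts = [
        f"Right now, in {f.location}, it's {f.current_temp} degrees and {f.condition}.",
        f"Later, expect a high temperature of {f.high} degrees and a low of {f.low}.",
        f"Chance of rain is {f.precip_chance}%.",
    ]
    # Mention accumulation only on rainy days when the value is available.
    if f.precip_amount_in is not None and f.precip_chance > 0:
        unit = "inch" if f.precip_amount_in == 1 else "inches"
        parts.append(f"Expect about {f.precip_amount_in:g} {unit} of rain.")
    return " ".join(parts)


print(build_response(Forecast("Atlanta, Georgia", 68, "mostly cloudy", 80, 63, 0)))
```

Keeping the sunny-day and rainy-day answers in one template, with accumulation as an optional clause, makes it easy to compare them side by side in reviews.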

Lastly, I knew we needed something extra to provide value to the user. So I identified five dominant conditions (humidity, wind, UV, pollen, and visibility) that, if too high or too low, could alter how users might feel or plan their day. I asked the developers to add these to our messages as insights. I also led the analysis and helped define business rules to rank the conditions, since we were likely only going to be able to return one insight per response.
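Because only one insight fits in each response, ranking rules like these can be expressed as an ordered list of trigger tests where the highest-priority match wins. Below is a minimal sketch, assuming hypothetical field names, thresholds, priorities, and wording; these are placeholders, not the business rules the team actually defined.

```python
from typing import Callable, NamedTuple, Optional


class Rule(NamedTuple):
    name: str
    priority: int                       # lower number wins
    triggered: Callable[[dict], bool]   # does this condition apply today?
    message: str


# ASSUMPTION: every threshold, priority, and message below is a placeholder.
RULES = [
    Rule("uv", 1, lambda d: d.get("uv_index", 0) >= 8,
         "The UV index is very high, so limit time in the sun."),
    Rule("wind", 2, lambda d: d.get("wind_mph", 0) >= 25,
         "It will be windy, with gusts that could make it feel cooler."),
    Rule("humidity", 3, lambda d: d.get("humidity_pct", 0) >= 80,
         "Humidity is high, so it will feel muggy."),
    Rule("pollen", 4, lambda d: d.get("pollen_level", "") in {"high", "very high"},
         "Pollen levels are high today."),
    Rule("visibility", 5, lambda d: d.get("visibility_mi", 10) < 1,
         "Visibility is low, so allow extra time if you're driving."),
]


def pick_insight(data: dict) -> Optional[str]:
    """Return the message of the highest-priority triggered rule, or None."""
    hits = [rule for rule in RULES if rule.triggered(data)]
    if not hits:
        return None
    return min(hits, key=lambda rule: rule.priority).message


print(pick_insight({"humidity_pct": 85, "wind_mph": 30}))
```

Keeping the rules as data rather than nested `if` statements may make them easier for meteorologists and product owners to review and reorder.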

Being Extra.

To get to ‘something extra,’ I deepened my analysis of the available data, reviewing all of the API documentation. Through this process, I identified a class of serviceable insights that could be added to our responses. I identified seven ‘dominant conditions’ that, if present in significant amounts, would affect how users might feel or navigate the world on a given day. I also identified other insight categories, like significant temperature change or ‘flux’ messages, that might also be important. Then, working with the meteorologists, I analyzed data values for things like the UV index to understand what values make them significant and how to communicate that to users with context and meaning in a weather report.
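For a value like the UV index, the question of significance maps naturally onto published exposure bands. Here is a minimal sketch, assuming the WHO Global Solar UV Index categories (low 0 to 2, moderate 3 to 5, high 6 to 7, very high 8 to 10, extreme 11+); the piece doesn't say which cutoffs the team settled on. The “high and above” rule for mentioning UV, the 15-degree flux threshold, and all message wording are hypothetical placeholders.

```python
from typing import Optional


def uv_category(uv_index: float) -> str:
    """Map a UV index to the WHO Global Solar UV Index exposure category.

    Boundaries use "< next band" so fractional values fall into a band.
    """
    if uv_index < 3:
        return "low"
    if uv_index < 6:
        return "moderate"
    if uv_index < 8:
        return "high"
    if uv_index < 11:
        return "very high"
    return "extreme"


def uv_insight(uv_index: float) -> Optional[str]:
    """Return a UV message only when the category is worth mentioning."""
    category = uv_category(uv_index)
    # ASSUMPTION: only "high" and above is treated as significant.
    if category in ("low", "moderate"):
        return None
    return f"The UV index will be {category} today, so plan on sun protection."


def flux_insight(today_high: int, yesterday_high: int, threshold: int = 15) -> Optional[str]:
    """Return a 'flux' message when the day-over-day change in highs is large."""
    # ASSUMPTION: a 15-degree swing is a placeholder threshold.
    delta = today_high - yesterday_high
    if abs(delta) < threshold:
        return None
    direction = "warmer" if delta > 0 else "cooler"
    return f"Today will be {abs(delta)} degrees {direction} than yesterday."


print(uv_insight(9))
print(flux_insight(85, 68))
```

Separating the numeric banding (`uv_category`) from the decision to speak (`uv_insight`) keeps the meteorological scale fixed while the product threshold stays easy to tune.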